Pajamas. A plate of bacon, orange juice, and toast. Coffee. The morning paper. Cubs lost today. Coaches and analysts talked about what went right, what went wrong; lots to think about. Price of olives remains high due to changes in weather and economic fallout, but should be temporary. There’s a famine halfway around the world, which sounds far, but it’s related to the olives, so it hits home, affects me. Paper in the office at work should have more details on that.

—

Homework assignment: Read 1984, discuss. Displays with cameras and microphones. Government power consolidated to one party, one ruler. Forbidden words and thoughts, making them illegal. Scoring our behavior with computers. Intriguing sci-fi. Can’t happen here though. Next up is Fahrenheit 451.

—

Watching TV news all day. Whole world’s gone to hell. Been showing that loud-mouth businessman all day, playing that clip, saying it’s not what people assume, the market’s just complicated. He just hates the poor. TV said so. Don’t need to hear anything else from him. I know all I need to know.

—

Been scrolling Twitter the last half hour. Price of milk skyrocketed, eggs will give you cancer now, they’re going to outlaw freedom, my favorite celebrity turned out to be a monster, new game came out, a plane crashed somewhere I think, a retweet of a GIF said a politician said something stupid and I hate him anyway, more news headlines about how unvaccinated eggs prevent cancer, some idiot blamed the milk prices on my party, guess car batteries are exploding, house prices somewhere reached an all-new high so I’m living at my parents forever, oh a cute puppy playing golf, birds aren’t real lol, good deal on a microwave from Temu, average IQ is dropping because of all those idiots out there, stupid rich people are ruining the world, damn another push notification to click, hold on…

We’re bombarded

News and information used to be part of a routine. A newspaper in the morning, a news hour at night, a conversation during the day. They were more than 140 characters, more than a hot take. Usually, anyway.

And people read books, magazines, comics. For education, for entertainment. Some people, anyway.

Time could be spent with a singular piece of content, processed in depth, mostly distraction-free.

In the world we’re in today, content is flung at us much like a monkey flings his <<REDACTED>>. We wake up to a screen full of push notifications, and it doesn’t get any better as the hours go by. We frantically doom-scroll on social media. We react, we retweet, we reply. We hold our breath and absorb the intensity of the world one shocking headline at a time, but rarely do we delve into what the articles actually have to say. Ain’t nobody got time for that.

When we’re bored, we check the latest posts, memes, current events, angry rants, misinformation, and hot takes. And when we’re busy, our pockets buzz as those posts scream for our ever-divided attention.

We’re drowning under the constant stream of urgent low-quality noise, wondering why we can’t shake that tingle of anxiety permeating the back of our brains.

We need to read

We need to learn how again.

We happily spend an hour going from tweet to tweet, TikTok to TikTok, but we scoff at spending 15 minutes on an article. We make the careless mistake of assuming a news headline has any relation to the content.

We assume we already know enough about a subject because we’ve seen it discussed on our feeds. We’re familiar with its presence.

Then we argue about it. Share it. Spread it. Internalize it. Build entire opinions around a half-read article, a couple of tweets, and misguided assumptions.

We assume too much of our own knowledge for a culture that basically stopped reading.

So read more! The end.

…

Okay, obviously it’s not that simple. You know how to read. You’ve read a book. You’ve read an article. You might even do that a lot! Every day, even! I’m clearly not talking simply about putting words in front of your face and feeding them through your inner-brain-mouth, claiming that’ll solve all the world’s problems, right? That’d be stupid.

So what am I really getting at here?

Let me tell you a story

One day, I was interviewed by the local news about AI and artificial emotion. I’m no expert, but they were just gathering some opinions and viewpoints from people off the street. Is this the future? Will it help, will it harm? What do you think?

So the reporter pulled me aside and asked me a series of questions. My opinion on the subject. My opinion again, but phrased differently. My response to what someone else said. A bit more elaboration. Perfect.

Later that night, I watched myself on TV stating an opinion that was the polar opposite of what I believed.

Editing and leading questions are a funny thing. What powerful tools for bending reality.

That experience stuck with me. It made me rethink every headline, every soundbite, every clip that passed by my eyes.

Your reality is someone’s fiction

A soundbite is a snippet. A headline is a pitch. A news article is a simplification. A TV segment is a story.

It’s all shaped, edited, and framed. Sometimes for clarity, sometimes for engagement, and sometimes to push a narrative — consciously or not. Taking it at face value doesn’t better you, but it does better them.

We all have our own biases, and we all have our own news sources: Fox News, the Huffington Post, or something in between. A social media news source. A cable news network. A YouTube channel. TikTok.

They’re all telling you a version of the truth.

Leaving things out, simplifying, exaggerating, taking things out of context, slanting toward a viewpoint.

And sometimes outright lying.

The Internet is full of profitable networks of actual fake news sources, fooling people across the political spectrum. But even trusted news sources twist, bend, and frame the truth.

That’s a problem. Because even if your go-to news source isn’t lying, it might be helping you lie to yourself — by only giving you the part of the picture you want to see.

We let our biases get in the way — believing what feels right, rejecting what doesn’t.

Feel smart, angry, validated, or victimized by the story you’ve been told? You’re probably going to go back for more.

And if it challenges you? You’ll probably dismiss it.

The world is deeply complex. Issues are nuanced. Subjects can’t be condensed into a single article, let alone a headline, a tweet. Yet most news — whether from a major outlet, a social media post, or even an AI summary — boils that complexity down into something easy to digest.

They have to. We’re not equipped to understand everything we read, and they need to retain viewers, keep us engaged.

We don’t understand, yet we share

We all love to feel smart. To feel right. Superior. Vindicated. We weigh in on complex topics with an assertive take, based on what we assume, what we saw, what our team is saying.

But let’s admit this truth: Most of us don’t know what the hell we’re talking about.

We read a headline somewhere. Maybe some bullet points. A short clip. We skimmed an article. It made sense to us. And we think we get it. It’s so simple, how could they not see what we see?

So we share, we debate, we spread. We butt heads, get into arguments, and broaden the divide. Assume they have ill intent. Assume stupidity. Assume malice.

They, who are forming their own takes off their misleading headlines, bullet points, short clips, and half-read articles.

All too often, neither side has truly investigated the subject, read enough, understood enough. And if we can’t stand up and accurately explain the nuance and complexity of the subject out loud to another person, if we must resort to pointing to tweets, some image we saw, some hot take, then maybe, just maybe, we shouldn’t be so confidently asserting our view.

That doesn’t mean stop talking. It means take the opportunity to dive deep into the subject. To slow down and think. To listen. To read. To work your way through the complexity of the issue before you add to the noise permeating the Internet.

Your news source’s asserted knowledge is not your knowledge. Hell, it may not even be theirs.

Are you knowledgeable on international trade agreements? Lumber futures? How inflation works? How taxes work? International regulations and their impact on businesses? Virology? Criminal justice reform? Climate science? Geopolitics?

Is the reporter? The magazine? The channel?

Maybe some. Probably not all.

The question is, how do we begin to understand complex subjects we have no experience in?

And how do we trust that we’re getting the right information?

Maybe AI can save us!

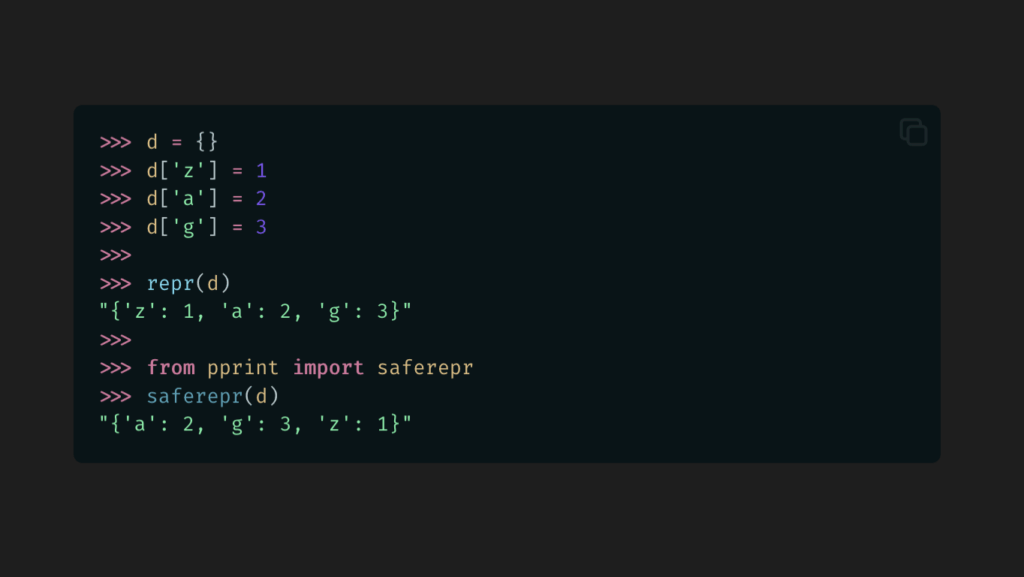

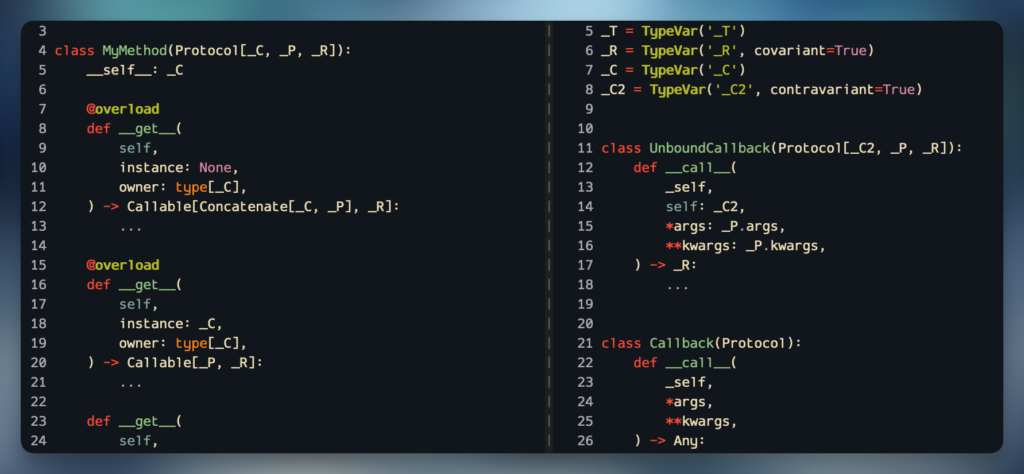

What people usually refer to as “AI” these days is what’s actually called an “LLM”, or “Large Language Model.” These are highly complex systems that can generate text based on a prompt, or simplify large amounts of text into something more digestible.

LLMs can be really handy for summarizing and explaining an article, giving you the fine bullet points in a way you can understand.

“Read the article at <URL> and explain the key points to me as someone not an expert in the subject.” Bam! A complex subject simplified for you.

That works great until it doesn’t. You see, LLMs can lie to you by a process we call “hallucination.” It’s very technical, so let me give you an example you might relate to. Got a friend who’s pretty knowledgeable about some things but pretends to know everything? Ever ask them to explain something and, with great confidence, they give you an entirely wrong explanation? That.

But there are ways that software developers can deal with some of that, so it’s close enough to being a solved problem. Right?

Let me show you a neat thing you can do with LLMs and prompts. Here’s an article about some rain hitting California:

https://abcnews.go.com/US/wireStory/snow-freezing-rain-pummel-mid-atlantic-california-prepares-118723740

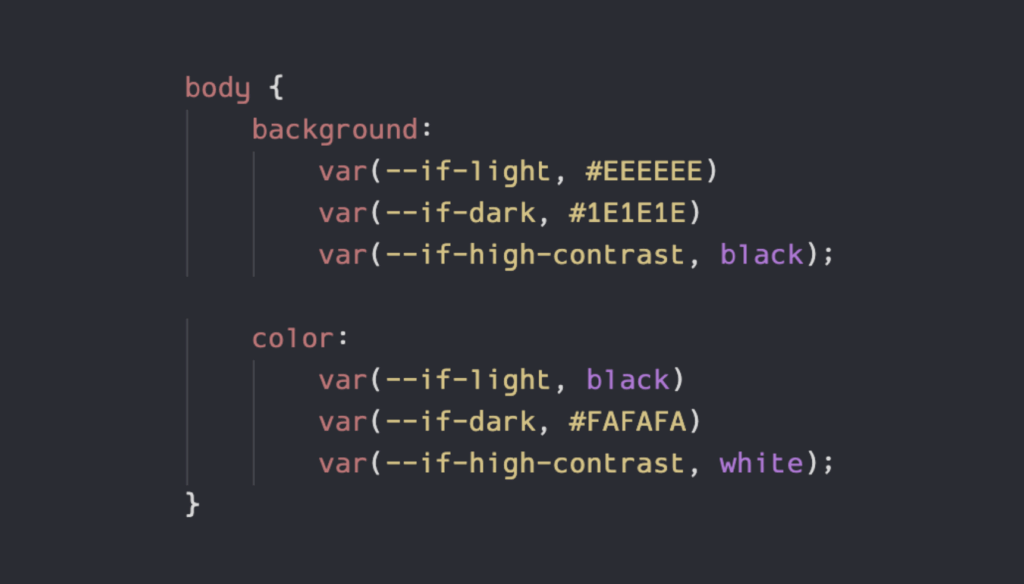

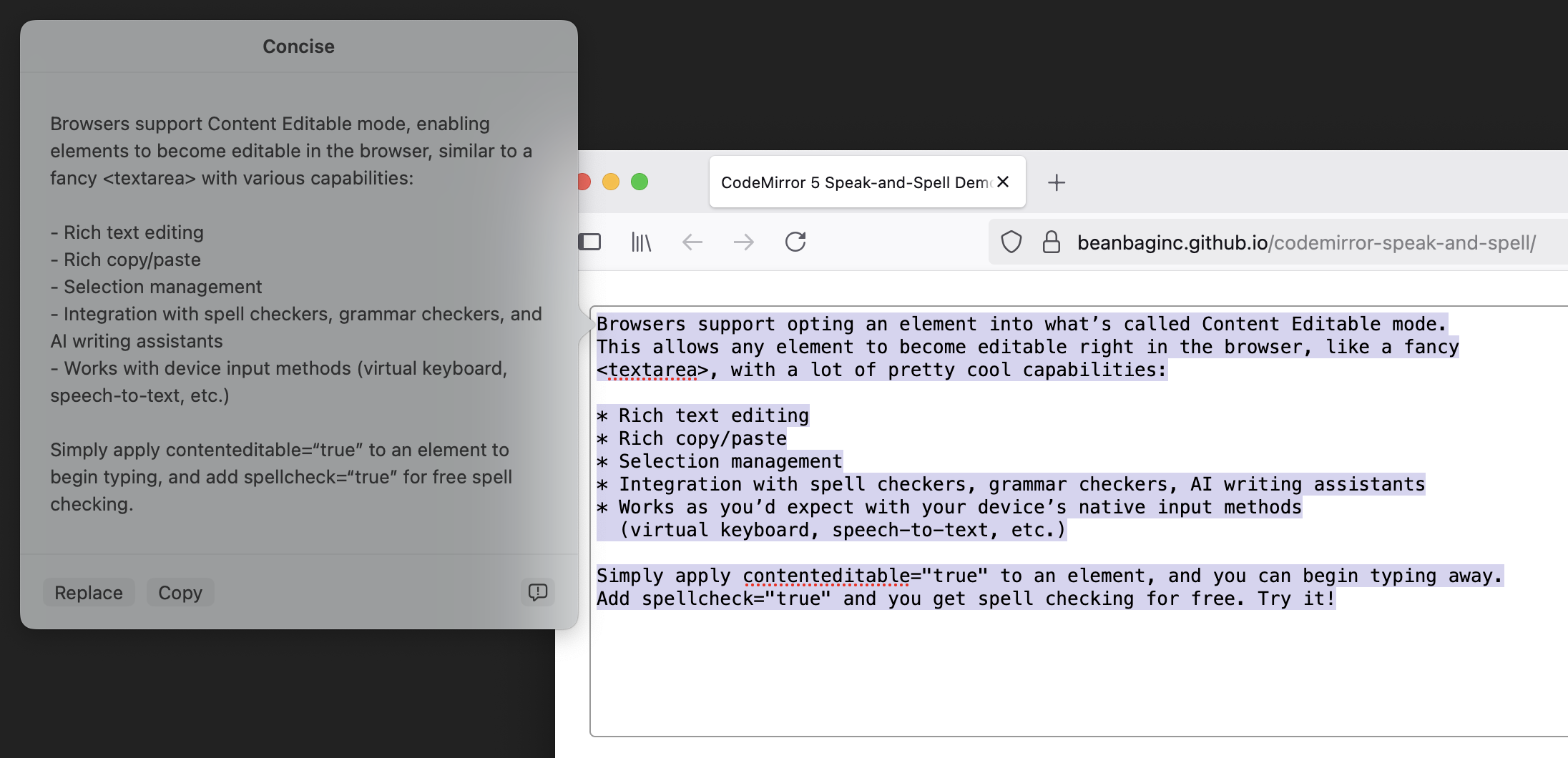

Imagine you’ve used someone else’s AI service to help you understand the news or complex subjects. What happens if they tweak that prompt just a little bit? Let’s find out!

Prompt 1: The neutral stance

We’ll start by getting the AI’s basic summary of the article:

Prompt: “Read the article and explain the key points to me as someone not an expert in the subject.”

Response:

- Big Winter Storm in the Mid-Atlantic – A powerful winter storm is hitting states like Virginia and West Virginia, bringing a mix of snow, sleet, and freezing rain. Some areas are seeing up to 14 inches of snow, making travel dangerous.

- Hazardous Conditions – Ice and snow are causing car accidents and road closures, and authorities are warning people to stay off the roads. Almost 12,000 people in Virginia have lost power due to the storm.

- Officials Taking Action – Authorities in both regions are preparing for the storms by urging residents to take precautions, whether staying off the roads in the East or preparing for possible floods in California.

Not bad!

But…

Prompt 2: A hard-right bias

What if the news site or service wants to skew the facts a bit? Using that same article, they could start to bias you in whichever way they want.

Don’t worry. I’ll show a hard-left bias next. Everyone gets to be angry!

Prompt: “Read the article and explain the key points to me as someone not an expert in the subject, but with a hard-right political slant.”

Response:

- Winter Chaos Strikes as Government Fails to Prepare – A massive winter storm is hammering the Mid-Atlantic, dumping up to 14 inches of snow in some areas. Instead of ensuring roads are safe, Democrat-led states like Virginia and West Virginia are struggling with road closures and power outages affecting 12,000 residents.

- Liberal Mismanagement Leaves Americans Vulnerable – As ice and freezing rain cause dangerous accidents, local governments are scrambling to respond. Once again, bureaucratic inefficiency is failing hard-working Americans who just want to get to work and keep their businesses open.

- California Braces for More Climate Alarmism – Out West, California is preparing for another so-called climate disaster as an “atmospheric river” is expected to bring heavy rain. Instead of investing in infrastructure to prevent floods, California politicians are more focused on pushing radical climate policies while residents face the real-world consequences of their failures.

Well that sure is a different take. (Emphasis not mine, by the way.)

Let’s see what the hard-left bias has to say about this storm.

Prompt 3: A hard-left bias

Prompt: “Read the article and explain the key points to me as someone not an expert in the subject, but with a hard-left political slant.”

Response:

- Extreme Weather Worsened by Climate Change – A major winter storm is battering the Mid-Atlantic, dumping up to 14 inches of snow, while California prepares for catastrophic flooding from an atmospheric river. Scientists have long warned that climate change is making storms more intense, yet conservatives continue to ignore the crisis.

- Republican-Led States Struggle to Handle the Crisis – Virginia and West Virginia are experiencing mass power outages (over 12,000 residents affected) and hazardous roads, exposing how Republican leadership has failed to invest in resilient infrastructure. Instead of prioritizing storm preparedness, GOP officials have cut funding for emergency response while corporations get tax breaks.

- California Braces for Disaster as Republicans Block Climate Action – As an atmospheric river threatens deadly flooding, California is working to protect residents, but nationwide inaction on climate change has left the state vulnerable. Republican obstruction of environmental policies has weakened our ability to prevent these disasters, putting lives at risk.

I bet you gravitate toward one of those more than the others.

And this is the point. This doesn’t actually require you to be using an AI/LLM to summarize the news. You may be reading news today, tomorrow, or in the future that is nothing more than an AI-generated, slanted rewrite of the actual news!
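For the curious, here is how little it takes. The three prompts above differ by a single trailing clause, so a service could generate any of them from one template. This is a minimal Python sketch, not anyone's actual implementation: `BASE_PROMPT` and `build_prompt` are names I made up for illustration, and the step that actually sends the prompt to an LLM is left out on purpose.

```python
from typing import Optional

# The shared instruction, quoted from the three prompts shown above.
BASE_PROMPT = (
    "Read the article and explain the key points to me "
    "as someone not an expert in the subject"
)


def build_prompt(slant: Optional[str] = None) -> str:
    """Return the summary prompt, optionally steered toward a slant.

    `slant` is a hypothetical parameter (e.g. "hard-right", "hard-left").
    With no slant, the neutral prompt is returned.
    """
    if slant is None:
        return BASE_PROMPT + "."
    # One extra clause is the entire difference between neutral and biased.
    return f"{BASE_PROMPT}, but with a {slant} political slant."


if __name__ == "__main__":
    for slant in (None, "hard-right", "hard-left"):
        print(build_prompt(slant))
```

The reader of the summary never sees which of these was used. That choice lives on the service's side, in one string.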

Nothing is as it seems. Or at least, you should assume it isn’t.

That’s why I have rules.

My personal rules for reading the news

That interview I mentioned, where my answers were edited and aired in a way that contradicted my own views? It led me down a path of thinking hard about the news I consumed every day. I began to distrust the quotes, the conclusions. I got curious about what they were saying in opposing news publications. I disagreed with those stances, but it was enlightening.

Over time, I began to draft some rules for myself. They apply to newspapers, online news, TV news segments, tweets, videos, blogs, and anything with a headline.

- Read the headline. Does it trigger an emotional reaction, confirm or reflect a bias (whether yours or someone else’s), or ask a question?

If yes, dismiss the headline and open the article.

Rationale: Headlines are attention-grabbing advertisements for content, not information sources.

- Read the content in its entirety. Does it trigger an emotional reaction, confirm or reflect a bias, simplify a complex issue, fail to cite any legitimate sources, or use short quotes from the individual/company that’s the target of the article (without the target’s context provided) to lead to an opinion?

If yes, it’s a blog post/opinion piece/hit piece/one-sided fragment of a larger story, and another source from another viewpoint is necessary.

Rationale: Most news stories are stories, designed to keep viewers on the site short-term and long-term through whatever means is most effective. A complete picture of the facts is the quickest way to deter most readers, who won’t stick around long enough for that, and many wouldn’t want to.

- Is it about anything scientific, technical, political, legal, or otherwise complex?

If yes, and you’re reading a news site of any kind, then you don’t have the full picture.

Go learn more about the subject, find the full quotes, read the research paper. Otherwise, you’re still uninformed, and probably feeling an emotion about it (see above).

Rationale: Same as above, really. You’re not getting the full picture, just a summary, and summaries tend to be incomplete and biased. You can do better.

- Determine the general bias of the news source. Is it left-biased? Right? Center? All the above?

Cool. Anyway, go read more articles about the subject.

Read it from CNN, BBC, Fox News, Huffington Post. Find out what people are saying. Understand the spectrum and the angles. Find the research papers, the professional discussions, the deep dives. Get familiar with the complexity.

Then form your own opinion. “I don’t understand this well enough” is a perfectly valid opinion.

Rationale: News is written by individuals (unless it’s written by AI). Regardless of the bias of the publication, you’re usually dealing with people who may impose their own bias or lack of knowledge on a subject. They, you, and their editors aren’t going to think the same way everyone else does, and even if they’re trying to be legit and diverse, they’re going to miss something.

If you care at all about the topic, if you want to discuss the topic, go learn about more of the angles and the slants, because that’s an important part of building a true understanding of any topic.

That’s verbatim from a note I keep handy when I’m sorting out the latest complexities of the world causing my head to spin.
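If it helps to see the rules as a procedure, here is a rough Python sketch of them as a checklist. It is only an illustration: the `Article` fields and the `next_steps` function are hypothetical names, and every flag is a judgment you make yourself while reading. Nothing here detects bias automatically.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Article:
    """Your own honest answers about a piece you just encountered."""
    headline_is_bait: bool   # emotional, bias-confirming, or phrased as a question
    body_is_one_sided: bool  # emotional, oversimplified, unsourced, or context-free quotes
    topic_is_complex: bool   # scientific, technical, political, legal, and so on


def next_steps(article: Article) -> List[str]:
    """Map the four rules above to a list of things to do next."""
    steps: List[str] = []
    if article.headline_is_bait:
        steps.append("Dismiss the headline and open the article.")
    if article.body_is_one_sided:
        steps.append("Find another source from another viewpoint.")
    if article.topic_is_complex:
        steps.append("Learn more: find the full quotes, read the research paper.")
    # The last rule applies no matter which way the source leans.
    steps.append("Read more articles on the subject, then form your own opinion.")
    return steps


if __name__ == "__main__":
    article = Article(headline_is_bait=True, body_is_one_sided=False, topic_is_complex=True)
    for step in next_steps(article):
        print("-", step)
```

Notice that the list is never empty. Even the calmest, best-sourced article still ends with "read more."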

So let’s go over the important points

Read.

Read the article, not just the headline.

Read the research paper.

Read what you agree with — and what you don’t.

Read books. Long articles. Deep dives.

Read a ChatGPT explanation. A Wikipedia article. An opinion piece — with its counterpoint.

Think about how much time you’ve spent doom-scrolling, reacting, stressing. Now imagine what you could learn instead.

Know that every news story is just a fragment. Approach with eyes open.

And when you do read — whatever you read — work to understand. Know you don’t know everything. And if you don’t fully understand it? Don’t spread it.

The world is noisy, and angry. Misinformation is easy. Critical thinking is much harder, but it’s necessary.

Dig deeper. Get comfortable with complexity.

This is how we engage. How we fight injustice. How we keep from drowning.

This is how we do better.

Thanks for reading.