A crash course on Python function signatures and typing

I’ve been doing some work on Review Board and our utility library Typelets, and thought I’d share some of the intricacies of function signatures and their typing in Python.

We have some pretty neat Python typing utilities in the works to help a function inherit another function’s types in its own *args and **kwargs without rewriting a TypedDict. This is useful for functions that need to forward arguments to another function. I’ll talk more about that later, but understanding how it works first requires understanding a bit about how Python sees functions.

Function signatures

Python’s inspect module is full of goodies for analyzing objects and code, and today we’ll explore the inspect.signature() function.

inspect.signature() is used to introspect the signature of another function, showing its parameters, default values, type annotations, and more. It can also aid IDEs in understanding a function signature. If a function has __signature__ defined, it will reference this (at least in CPython), and this gives highly-dynamic code the ability to patch signatures at runtime. (If you’re curious, here’s exactly how it works).
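As a quick sketch of that kind of patching (the names wrapper and target are hypothetical), a generic forwarding function can advertise another function’s parameters by assigning to __signature__:

```python
import inspect


def target(a: int, b: str = 'x') -> str:
    return f'{a}{b}'


def wrapper(*args, **kwargs):
    # A generic forwarder: its real signature is just (*args, **kwargs).
    return target(*args, **kwargs)


# Override what introspection reports for wrapper.
wrapper.__signature__ = inspect.signature(target)

print(inspect.signature(wrapper))  # (a: int, b: str = 'x') -> str
print(wrapper(1))                  # 1x
```

functools.wraps() reaches a similar result a different way: it sets __wrapped__, which inspect.signature() follows by default.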

There are a few places where knowing the signature can be useful, such as:

- Automatically-crafting documentation (Sphinx does this)

- Checking if a callback handler accepts the right arguments

- Checking if an implementation of an interface is using deprecated function signatures
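For the callback case, a minimal sketch might lean on Signature.bind(), which raises TypeError when a set of arguments doesn’t fit the signature. The accepts() helper and the handler names below are hypothetical:

```python
import inspect
from typing import Any, Callable


def accepts(func: Callable[..., Any], *args: Any, **kwargs: Any) -> bool:
    """Return whether func could be called with these arguments (hypothetical helper)."""
    try:
        # bind() maps arguments to parameters without calling the function.
        inspect.signature(func).bind(*args, **kwargs)
    except TypeError:
        return False

    return True


def on_saved(sender, *, instance, **kwargs):
    pass


def old_handler(sender):
    pass


print(accepts(on_saved, 'sender', instance=1))     # True
print(accepts(old_handler, 'sender', instance=1))  # False: unexpected keyword
```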

Let’s set up a function and take a look at its signature.

>>> def my_func(
...     a: int,
...     b: str,
...     /,
...     c: dict[str, str] | None,
...     *,
...     d: bool = False,
...     **kwargs,
... ) -> str:
...     ...
...
>>> import inspect
>>> sig = inspect.signature(my_func)
>>> sig
<Signature (a: int, b: str, /, c: dict[str, str] | None, *, d: bool = False, **kwargs) -> str>
>>> sig.parameters
mappingproxy(OrderedDict({
    'a': <Parameter "a: int">,
    'b': <Parameter "b: str">,
    'c': <Parameter "c: dict[str, str] | None">,
    'd': <Parameter "d: bool = False">,
    'kwargs': <Parameter "**kwargs">
}))
>>> sig.return_annotation
<class 'str'>
>>> sig.parameters.get('c')
<Parameter "c: dict[str, str] | None">
>>> 'kwargs' in sig.parameters
True
>>> 'foo' in sig.parameters
False

Pretty neat. Pretty useful, when you need to know what a function takes and returns.

Let’s see what happens when we work with methods on classes.

>>> class MyClass:
...     def my_method(
...         self,
...         *args,
...         **kwargs,
...     ) -> None:
...         ...
...
>>> inspect.signature(MyClass.my_method)
<Signature (self, *args, **kwargs) -> None>

Seems reasonable. But…

>>> obj = MyClass()
>>> inspect.signature(obj.my_method)
<Signature (*args, **kwargs) -> None>

self disappeared!

What happens if we do this on a classmethod? Place your bets…

>>> class MyClass2:
...     @classmethod
...     def my_method(
...         cls,
...         *args,
...         **kwargs,
...     ) -> None:
...         ...
...
>>> inspect.signature(MyClass2.my_method)
<Signature (*args, **kwargs) -> None>

If you guessed it wouldn’t have cls, you’d be right.

Only unbound methods (definitions of methods on a class) will have a self parameter in the signature. Bound methods (callable methods bound to an instance of a class) and classmethods (callable methods bound to a class) don’t. And this makes sense, if you think about it, because this signature represents what the call accepts, not what the code defining the method looks like.

You don’t pass a self when calling a method on an object, or a cls when calling a classmethod, so it doesn’t appear in the function signature. But did you know that you can call an unbound method if you provide an object as the self parameter? Watch this:

>>> class MyClass:
...     def my_method(self) -> None:
...         self.x = 42
...
>>> obj = MyClass()
>>> MyClass.my_method(obj)
>>> obj.x
42

In this case, the unbound method MyClass.my_method has a self argument in its signature, meaning it takes it in a call. So, we can just pass in an instance. There aren’t a lot of cases where you’d want to go this route, but it’s helpful to know how this works.
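Another way to see the relationship: a bound method object simply pairs the original function (__func__) with the instance (__self__). A minimal check, reusing the MyClass example:

```python
class MyClass:
    def my_method(self) -> None:
        self.x = 42


obj = MyClass()
bound = obj.my_method

# The bound method wraps the very same function object stored on the class...
print(bound.__func__ is MyClass.my_method)  # True

# ...and remembers the instance to pass in as self.
print(bound.__self__ is obj)                # True

# So these two calls do the same thing.
bound()
MyClass.my_method(obj)
print(obj.x)                                # 42
```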

What are bound and unbound methods?

I briefly touched upon this, but:

- Unbound methods are just functions. Functions that are defined on a class.

- Bound methods are functions whose very first argument (self or cls) is bound to a value.

Binding normally happens when you access a method through an instance (Python calls the function’s __get__() behind the scenes), but you can do it yourself through any function’s __get__():

>>> def my_func(self):
...     print('I am', self)
...
>>> class MyClass:
...     ...
...
>>> obj = MyClass()
>>> method = my_func.__get__(obj)
>>> method
<bound method my_func of <__main__.MyClass object at 0x100ea20d0>>
>>> method.__self__
<__main__.MyClass object at 0x100ea20d0>
>>> inspect.ismethod(method)
True
>>> method()
I am <__main__.MyClass object at 0x100ea20d0>

my_func wasn’t even defined on a class, and yet we could still make it a bound method tied to an instance of MyClass.

You can think of a bound method as a convenience over having to pass in an object as the first parameter every time you want to call the function. As we saw above, we can do exactly that, if we pass it to the unbound method, but binding saves us from doing this every time.

You’ll probably never need to do this trick yourself, but it’s helpful to know how it all ties together.

By the way, @staticmethod is a way of telling Python to never turn the function into a bound method when it’s accessed through an instance (it stays a plain function), and @classmethod is a way of telling Python to always bind it to the class rather than to an instance (even when it’s accessed through one).
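A small sketch of those three behaviors side by side (the Example class is made up for illustration):

```python
import inspect


class Example:
    def regular(self) -> None: ...

    @staticmethod
    def static() -> None: ...

    @classmethod
    def klass(cls) -> None: ...


obj = Example()

# A regular function is bound to the instance it's accessed through.
print(obj.regular.__self__ is obj)        # True

# staticmethod never binds: accessing it yields the plain function.
print(inspect.isfunction(obj.static))     # True

# classmethod binds to the class, even when accessed through an instance.
print(obj.klass.__self__ is Example)      # True
print(Example.klass.__self__ is Example)  # True
```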

How do you tell them apart?

If you have a function, and you don’t know if it’s a standard function, a classmethod, a bound method, or an unbound method, how can you tell?

- Bound methods have a __self__ attribute pointing to the parent object (and inspect.ismethod() will be True).

- Classmethods have a __self__ attribute pointing to the parent class (and inspect.ismethod() will be True).

- Unbound methods are tricky:

  - They do not have a __self__ attribute.

  - They might have a self or cls parameter in the signature, but they might not use those names (and other functions may define them).

  - They should have a '.' in their __qualname__ attribute. This is a full '.'-separated path to the method, relative to the module.

  - Splitting __qualname__, the last component would be the name. The previous component won’t be <locals> (but if <locals> is found, you’re going to have trouble getting to the method).

  - If the full path is resolvable, the parent component should be a class (but it might not be).

  - You could… resolve the parent module to a file, walk its AST, and find the class and method based on __qualname__. But this is expensive and probably a bad idea for most cases.

- Standard functions are the fallback.

Since unbound methods are standard functions that are just defined on a class, it’s difficult to really tell the difference. You’d have to rely on heuristics and know you won’t always get a definitive answer.
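Putting those heuristics together might look something like the following. classify_callable() is a hypothetical helper, not a standard API, and it will misclassify some cases (a plain function bound to a class with __get__() reports as a classmethod, for example):

```python
import inspect
from typing import Any


def classify_callable(func: Any) -> str:
    """Heuristically classify a callable (hypothetical helper, not exhaustive)."""
    if inspect.ismethod(func):
        # Bound methods and classmethods both carry __self__.
        if inspect.isclass(func.__self__):
            return 'classmethod'

        return 'bound method'

    if inspect.isfunction(func):
        parts = func.__qualname__.split('.')

        # A dotted __qualname__ whose parent isn't '<locals>' suggests a class.
        if len(parts) > 1 and parts[-2] != '<locals>':
            return 'probably unbound method'

        return 'function'

    return 'other callable'


class Widget:
    def render(self): ...

    @classmethod
    def create(cls): ...


def helper(): ...


print(classify_callable(Widget().render))  # bound method
print(classify_callable(Widget.create))    # classmethod
print(classify_callable(Widget.render))    # probably unbound method
print(classify_callable(helper))           # function
```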

(Interesting note: In Python 2, unbound methods were special method objects with an im_class attribute pointing to the class they were defined on, so you could easily tell!)

The challenges of typing

Functions can be typed using Callable[[<param_types>], <return_type>].

This is very simplistic, and can’t be used to represent positional-only arguments, keyword-only arguments, default arguments, *args, or **kwargs. For that, you can define a Protocol with __call__:

from typing import Protocol

class MyCallback(Protocol):
    def __call__(
        self,  # This won't be part of the function signature
        a: int,
        b: str,
        /,
        c: dict[str, str] | None,
        *,
        d: bool = False,
        **kwargs,
    ) -> str:
        ...

Type checkers will then treat this as a Callable, effectively. If we take my_func from the top of this post, we can assign it to a variable of that type:

cb: MyCallback = my_func # This works

What if we want to assign a method from a class? Let’s try bound and unbound.

class MyClass:
    def my_func(
        self,
        a: int,
        b: str,
        /,
        c: dict[str, str] | None,
        *,
        d: bool = False,
        **kwargs,
    ) -> str:
        return '42'

cb2: MyCallback = MyClass.my_func  # This fails
cb3: MyCallback = MyClass().my_func  # This works

What happened? It’s that self again. Remember, the unbound method has self in the signature, and the bound method does not.

Let’s add self and try again.

from typing import Any, Protocol

class MyCallback(Protocol):
    def __call__(
        _proto_self,  # This won't be part of the function signature
        self: Any,
        a: int,
        b: str,
        /,
        c: dict[str, str] | None,
        *,
        d: bool = False,
        **kwargs,
    ) -> str:
        ...

cb2: MyCallback = MyClass.my_func  # This works
cb3: MyCallback = MyClass().my_func  # This fails

What happened this time?! Well, now we’ve matched the unbound signature with self, but not the bound signature without it.

Solving this gets… verbose. We can create two versions of this: Unbound, and Bound (or plain function, or classmethod):

class MyUnboundCallback(Protocol):
    def __call__(
        _proto_self,  # This won't be part of the function signature
        self: Any,
        a: int,
        b: str,
        /,
        c: dict[str, str] | None,
        *,
        d: bool = False,
        **kwargs,
    ) -> str:
        ...

class MyCallback(Protocol):
    def __call__(
        _proto_self,  # This won't be part of the function signature
        a: int,
        b: str,
        /,
        c: dict[str, str] | None,
        *,
        d: bool = False,
        **kwargs,
    ) -> str:
        ...

# These work
cb4: MyCallback = my_func
cb5: MyCallback = MyClass().my_func

# These fail correctly
cb7: MyUnboundCallback = my_func
cb8: MyUnboundCallback = MyClass().my_func
cb9: MyCallback = MyClass.my_func

This means we can use union types (MyUnboundCallback | MyCallback) to cover our bases.

It’s not flawless. Depending on how you’ve typed your signature, and the signature of the function you’re assigning, you might not get the behavior you want or expect. As an example, any function with a leading self-like parameter (basically any parameter coming before your defined signature) will type as MyUnboundCallback, because it might be one! Remember, we can turn any function into a bound method for an instance of an arbitrary class using __get__(). That may or may not matter, depending on what you need to do.

What do I mean by that?

def my_bindable_func(
    x,
    a: int,
    b: str,
    /,
    c: dict[str, str] | None,
    *,
    d: bool = False,
    **kwargs,
) -> str:
    return ''

x1: MyCallback = my_bindable_func  # This fails
x2: MyUnboundCallback = my_bindable_func  # This works

x may not be named self, but it’ll get treated as one, because if we do my_bindable_func.__get__(some_obj), then some_obj will be bound to x and callers won’t have to pass anything to x.
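At runtime, that binding looks like this, using a shortened version of my_bindable_func (Thing is a stand-in class):

```python
import inspect


def my_bindable_func(x, a: int, /) -> str:
    # x plays the role of self once the function is bound.
    return f'{type(x).__name__} got {a}'


class Thing:
    pass


bound = my_bindable_func.__get__(Thing())

print(inspect.ismethod(bound))   # True
print(bound(1))                  # Thing got 1
print(inspect.signature(bound))  # (a: int, /) -> str
```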

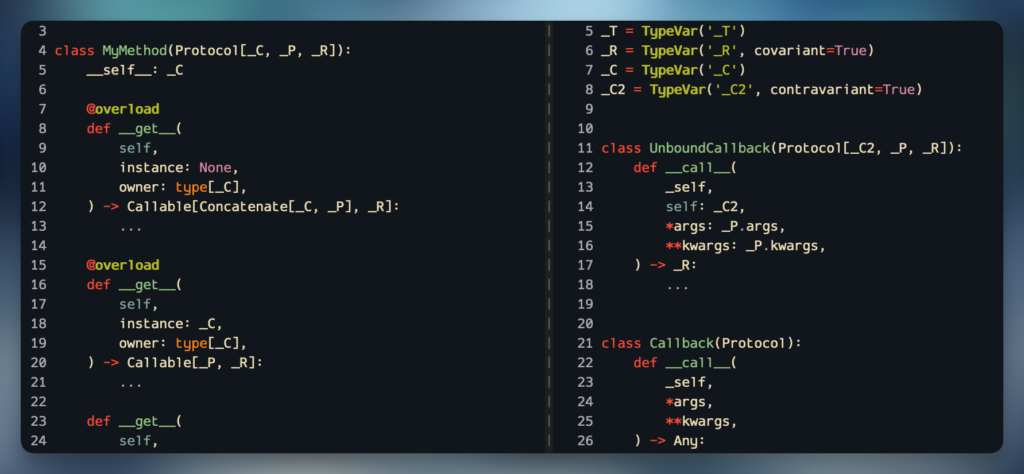

Okay, what if you want to return a function that can behave as an unbound method (with self) that can become a bound method (with __get__)? We can mostly do it with:

from typing import Callable, Concatenate, ParamSpec, Protocol, TypeVar, cast, overload

_C = TypeVar('_C')
_R_co = TypeVar('_R_co', covariant=True)
_P = ParamSpec('_P')

class MyMethod(Protocol[_C, _P, _R_co]):
    __self__: _C

    # Accessed on the class: unbound, so self must be passed in.
    @overload
    def __get__(
        self,
        instance: None,
        owner: type[_C],
    ) -> Callable[Concatenate[_C, _P], _R_co]:
        ...

    # Accessed on an instance: bound, so self is already supplied.
    @overload
    def __get__(
        self,
        instance: _C,
        owner: type[_C],
    ) -> Callable[_P, _R_co]:
        ...

    def __get__(
        self,
        instance: _C | None,
        owner: type[_C],
    ) -> (
        Callable[Concatenate[_C, _P], _R_co] |
        Callable[_P, _R_co]
    ):
        ...

Putting it into practice:

def make_method(
    source_method: Callable[Concatenate[_C, _P], _R_co],
) -> MyMethod[_C, _P, _R_co]:
    # At runtime this is a no-op; it only changes what type checkers see.
    return cast(MyMethod, source_method)

class MyClass2:
    @make_method
    def my_method(
        self,
        a: int,
        b: str,
        /,
        c: dict[str, str] | None,
        *,
        d: bool = False,
        **kwargs,
    ) -> str:
        return '42'

# These work!
MyClass2().my_method(1, 'x', {}, d=True)
MyClass2.my_method(MyClass2(), 1, 'x', {}, d=True)

That’s a fair bit of work, but it satisfies the bound vs. unbound methods signature differences. If we inspect these:

>>> reveal_type(MyClass2.my_method)
Type of "MyClass2.my_method" is "(MyClass2, a: int, b: str, /, c: dict[str, str] | None, *, d: bool = False, **kwargs: Unknown) -> str"
>>> reveal_type(MyClass2().my_method)
Type of "MyClass2().my_method" is "(a: int, b: str, /, c: dict[str, str] | None, *, d: bool = False, **kwargs: Unknown) -> str"

And those are type-compatible with the MyCallback and MyUnboundCallback we built earlier, since the signatures match:

# These work
cb10: MyUnboundCallback = MyClass2.my_method
cb11: MyCallback = MyClass2().my_method

# These fail correctly
cb12: MyUnboundCallback = MyClass2().my_method
cb13: MyCallback = MyClass2.my_method

And if we wanted, we could modify that ParamSpec going into the MyMethod from make_method() and that’ll impact what the type checkers expect during the call.

Hopefully you can see how this can get complex fast, and involve some tradeoffs.

I personally believe Python needs a lot more love in this area. Types for the different kinds of functions/methods, better specialization for Callable, and some of the useful capabilities from TypeScript would be nice (such as Parameters<T>, ReturnType<T>, OmitThisParameter<T>, etc.). But this is what we have to work with today.

My teachers said to always write a conclusion

What have we learned?

- Python’s method signatures are different when bound vs. unbound, and this can affect typing.

- Unbound methods aren’t really their own thing, and this can lead to some challenges.

- Any function can become a bound method with a call to __get__().

- Callable only gets you so far. If you want to type complex functions, write a Protocol with a __call__() signature.

- If you want to simulate a bound/unbound-aware type, you’ll need a Protocol with __get__().

I feel like I just barely scratched the surface here. There’s a lot more to functions, working with signatures, and challenges around typing than I covered here. We haven’t talked about how you can rewrite signatures on the fly, how annotations are represented, what functions look like under the hood, or how the bytecode behind functions is mutable at runtime.

I’ll leave some of that for future posts. And I’ll have more to talk about when we expand Typelets with the new parameter inheritance capabilities. It builds upon a lot of what I covered today to perform some very neat and practical tricks for library authors and larger codebases.

What do you think? Did you learn something? Did I get something wrong? Have you done anything interesting or unexpected with signatures or typing around functions you’d like to share? I want to hear about it!
