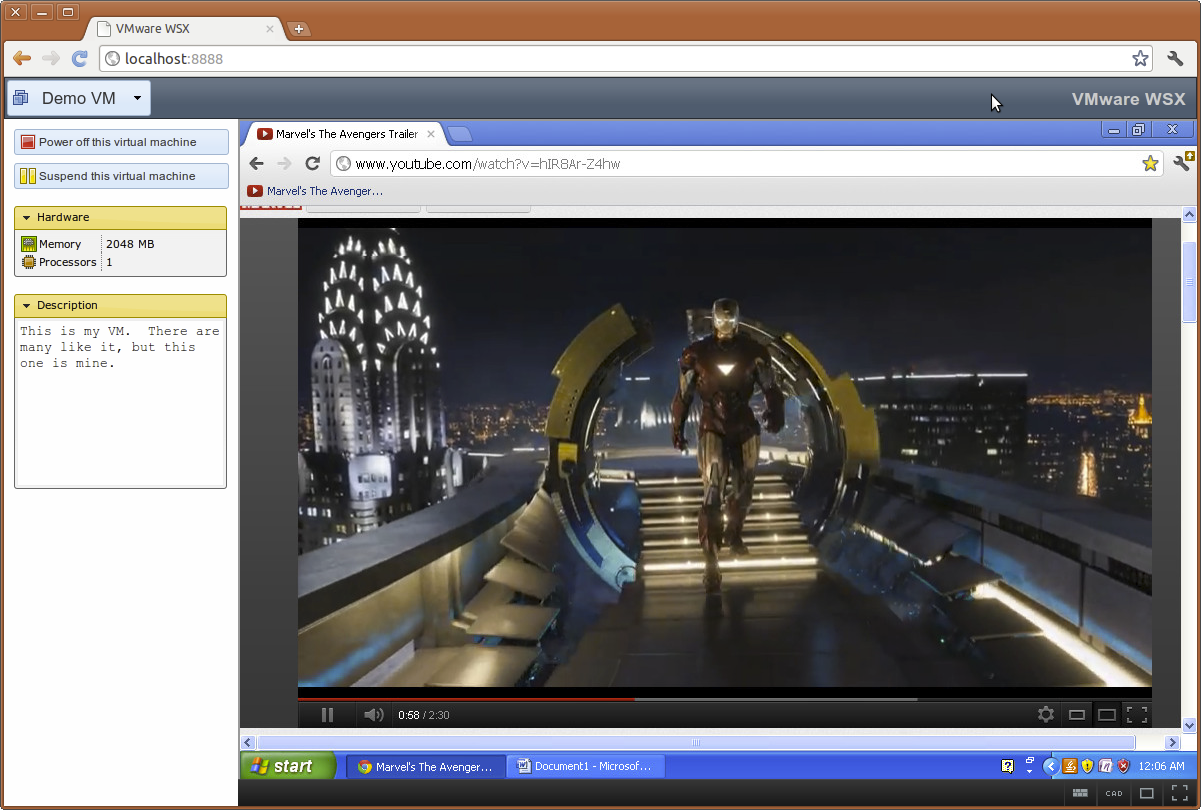

VMware WSX TP2: Faster, Shinier, and Less Broken

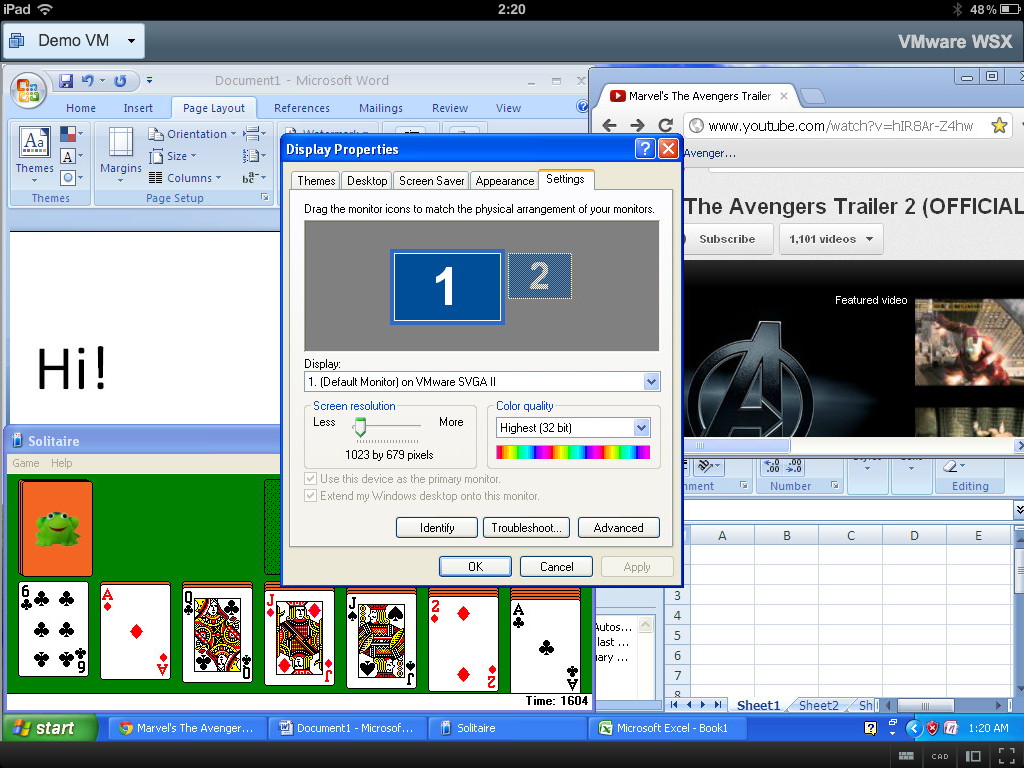

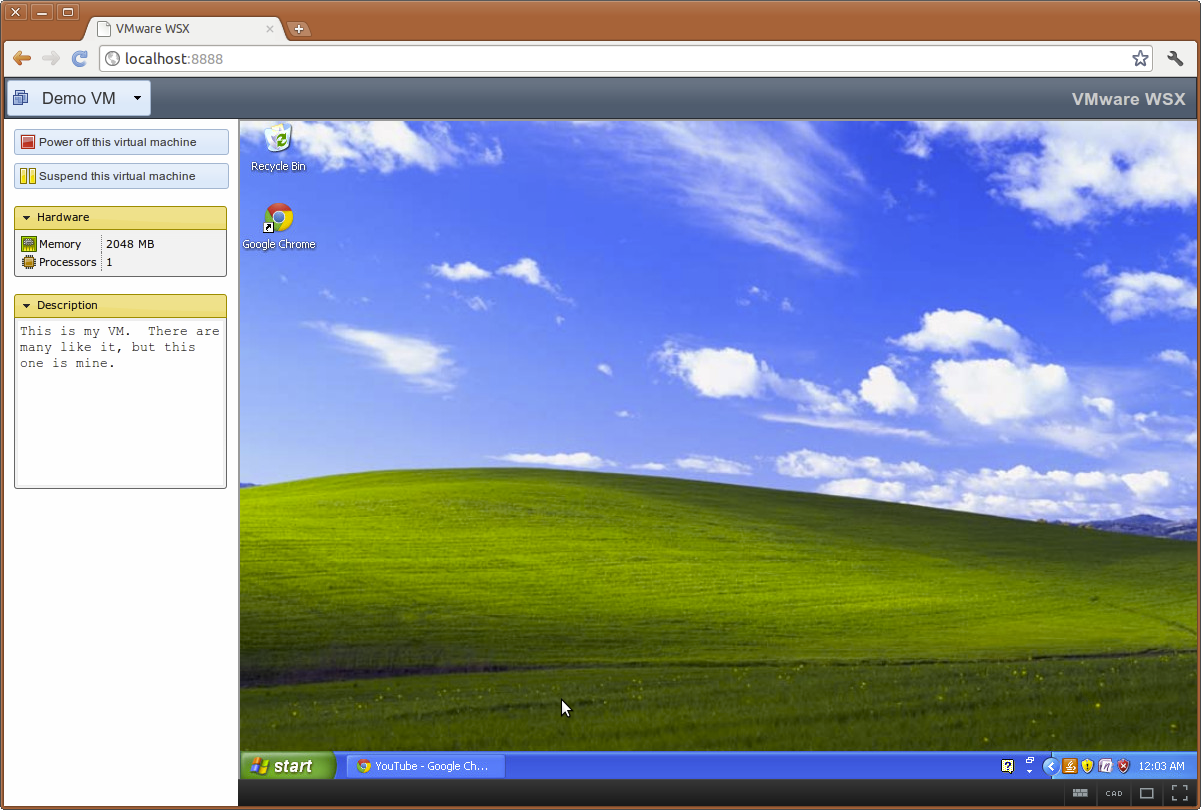

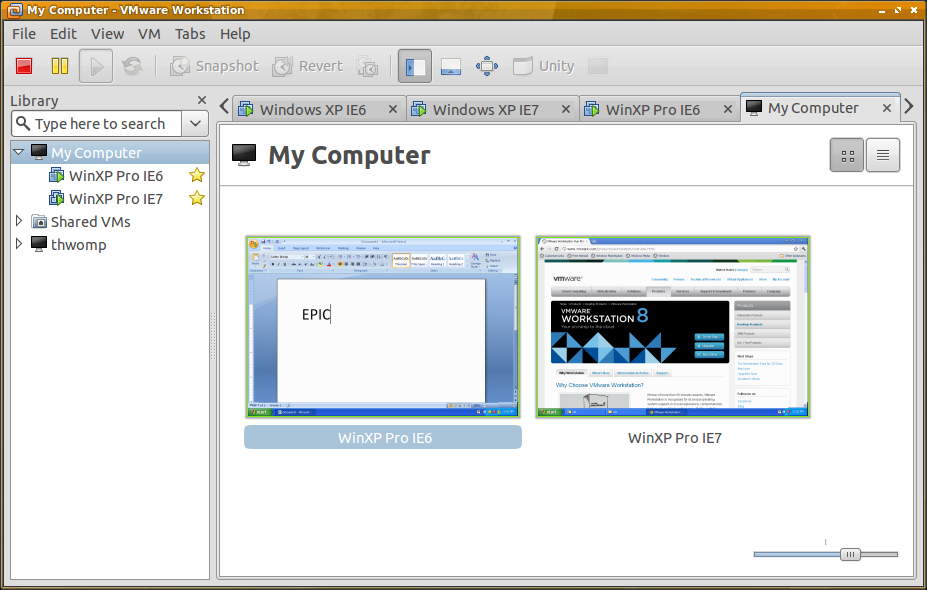

A few months back, I introduced VMware WSX, a new product I’ve been developing at VMware to access virtual machines in any modern web browser without plugins. The response blew me away. News spread to Ars Technica, Engadget, Windows IT Pro, InfoWorld, and many other publications and sites.

I’m happy to announce that we’ve released another build today: WSX Tech Preview 2. You can get it on the Workstation Technology Preview 2012 forum. Just click “Downloads” and download either the Windows or the Linux installer.

Like the first Tech Preview, this is a prototype of what’s to come. I’m actively working on a rewrite that will prove much more reliable, with better compatibility and room for future growth. We have a pretty good release here, though, and I’d like to break down everything that has changed.

Windows Installer

The first preview of WSX was only for Linux. I work primarily on Linux, and as such, this was my priority. While we weren’t able to get a proper Windows build ready for TP1, we now have it for TP2. So Windows users, if that’s been holding you back, give it a try now!

Better Performance

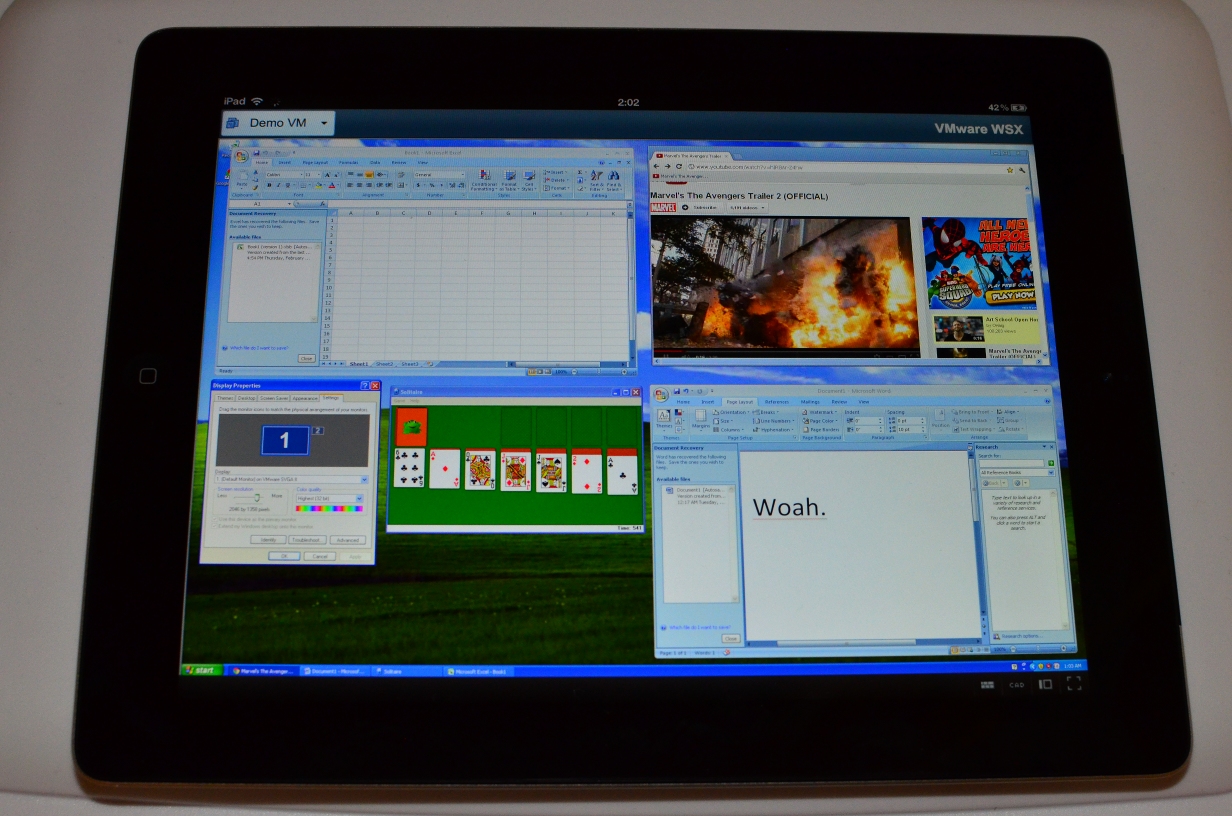

We’ve optimized the rendering to the screen. This should result in faster updates, making things much smoother, particularly on iOS. We’ve added some mobile (and specifically iOS) rendering improvements, and they really help. As we continue to evolve WSX, expect the experience on mobile to only get better.

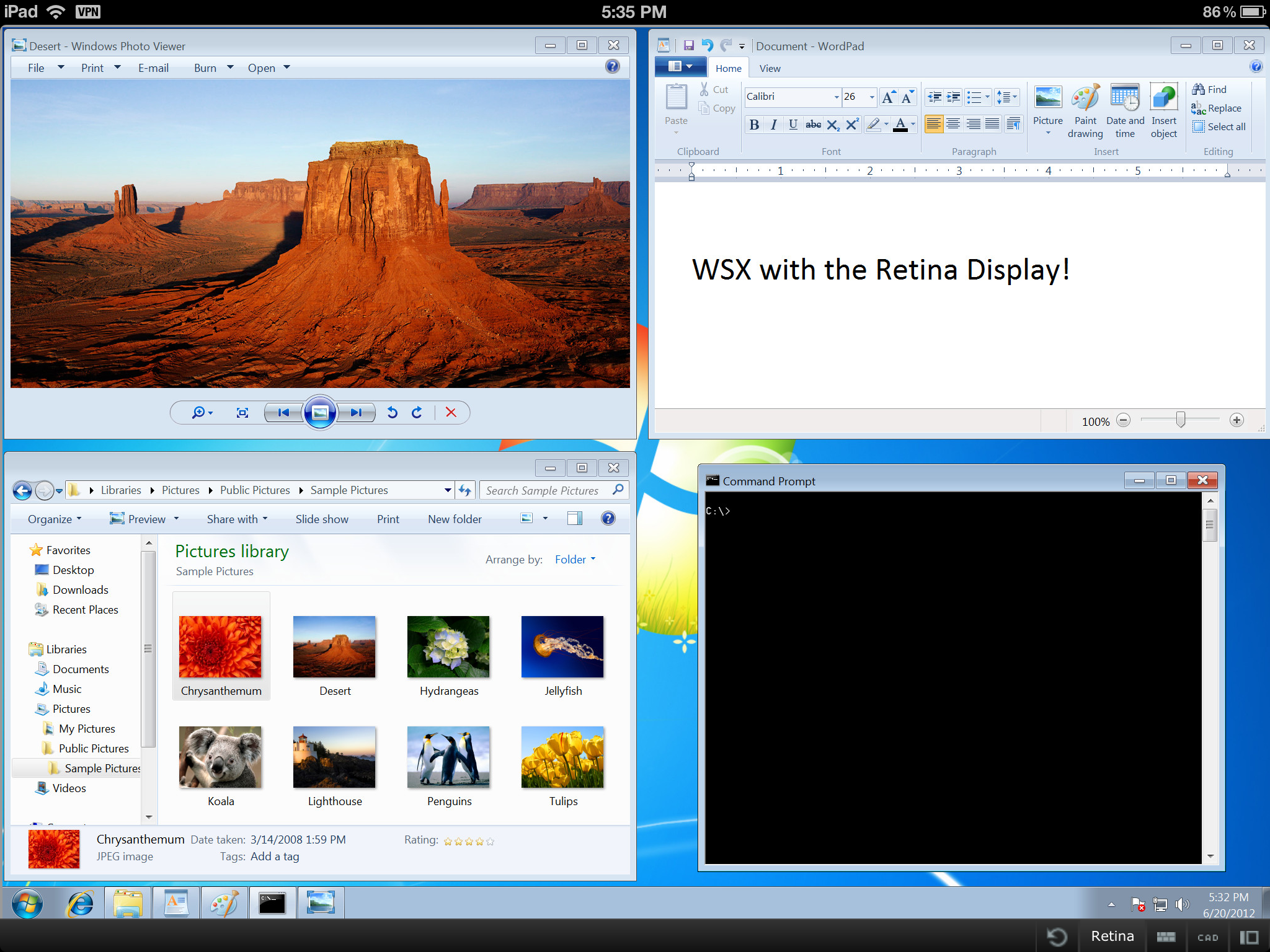

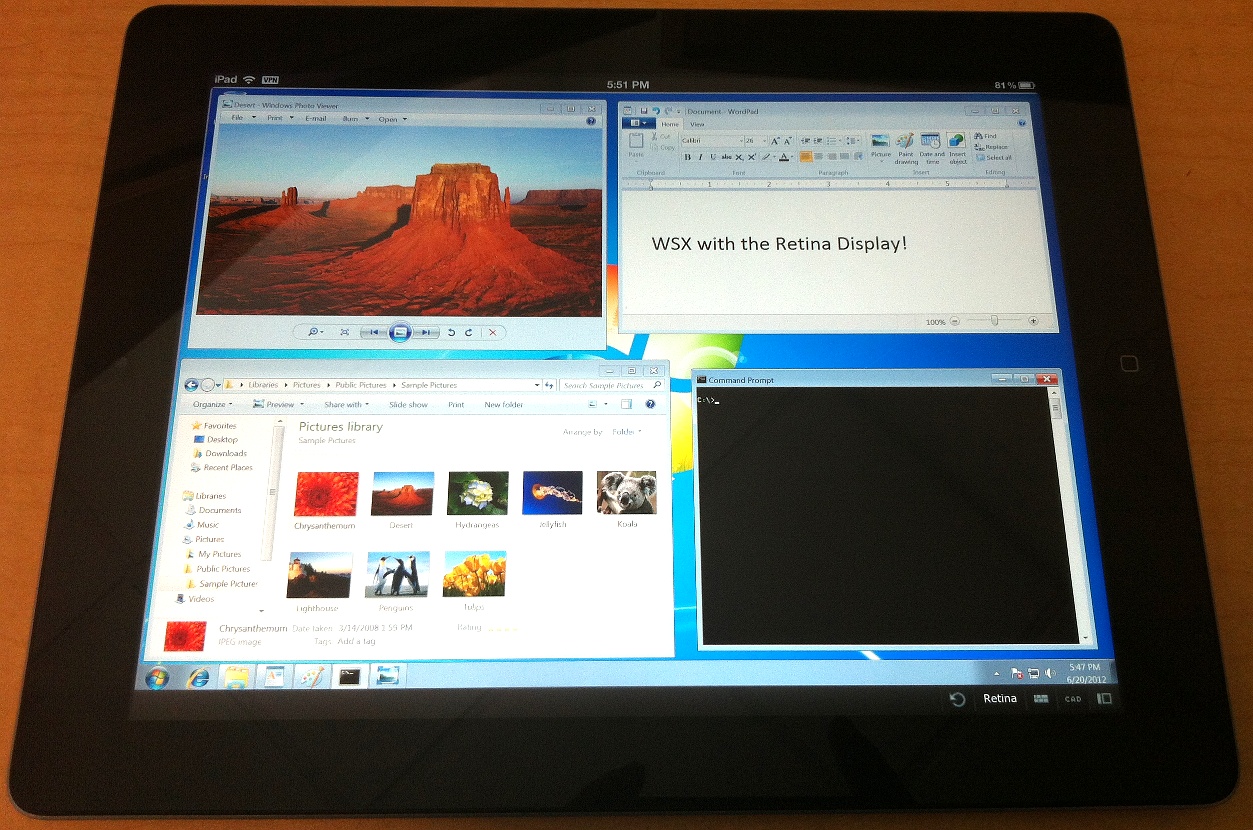

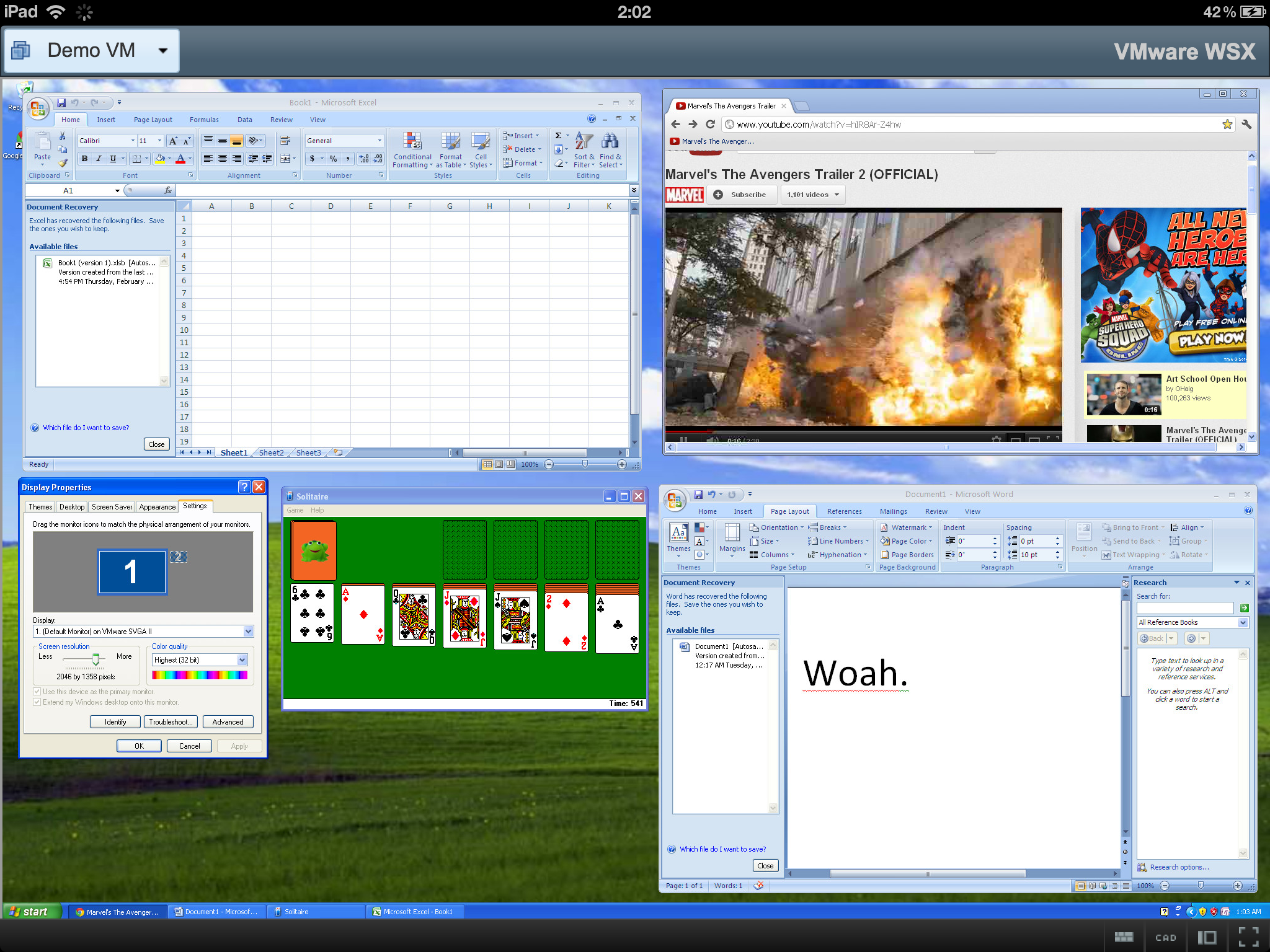

Retina on iOS

When you go to WSX on an iOS device, you’ll see some changes. First of all, the icons will be more crisp and Retina-friendly. Second, there’s a new “Retina” button for switching the VM into retina mode. I blogged about this a while back, and it’s finally ready to be played with. (Note: There are some occasional rendering bugs to work out.)

But wait! MacBook Pros have Retina displays too!
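For readers curious what a Retina mode involves in general, here is a minimal sketch of the usual idea: scale the resolution requested from the guest by the browser's device pixel ratio, so that one guest pixel maps to one physical screen pixel. The names `Size` and `guestResolution` are made up for illustration, and this is an assumption about the technique, not WSX's actual code.

```typescript
// Hypothetical helper; illustrates the general high-DPI technique only.
interface Size {
  width: number;
  height: number;
}

// Given the size of the display area in CSS pixels, return the resolution
// to request from the guest. With Retina mode off, the guest matches the
// CSS size; with it on, the size is multiplied by the device pixel ratio.
function guestResolution(
  cssSize: Size,
  devicePixelRatio: number,
  retinaEnabled: boolean
): Size {
  // Never scale below 1, even if the browser reports an odd ratio.
  const scale = retinaEnabled ? Math.max(1, devicePixelRatio) : 1;
  return {
    width: Math.round(cssSize.width * scale),
    height: Math.round(cssSize.height * scale),
  };
}
```

In a browser, the ratio would typically come from `window.devicePixelRatio`. On a display with a ratio of 2, a 1024x768 area would ask the guest for 2048x1536 with Retina mode on and 1024x768 with it off.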

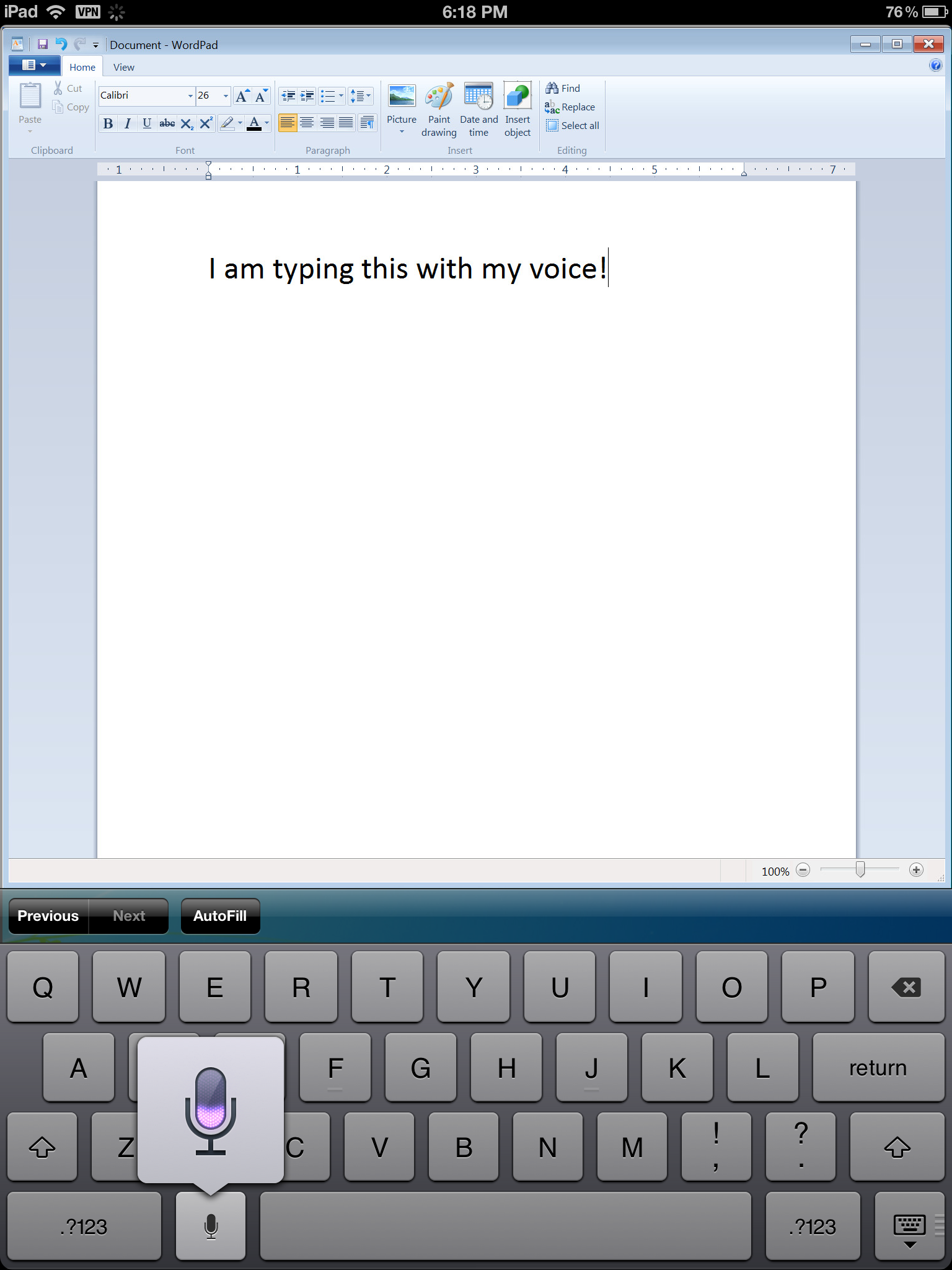

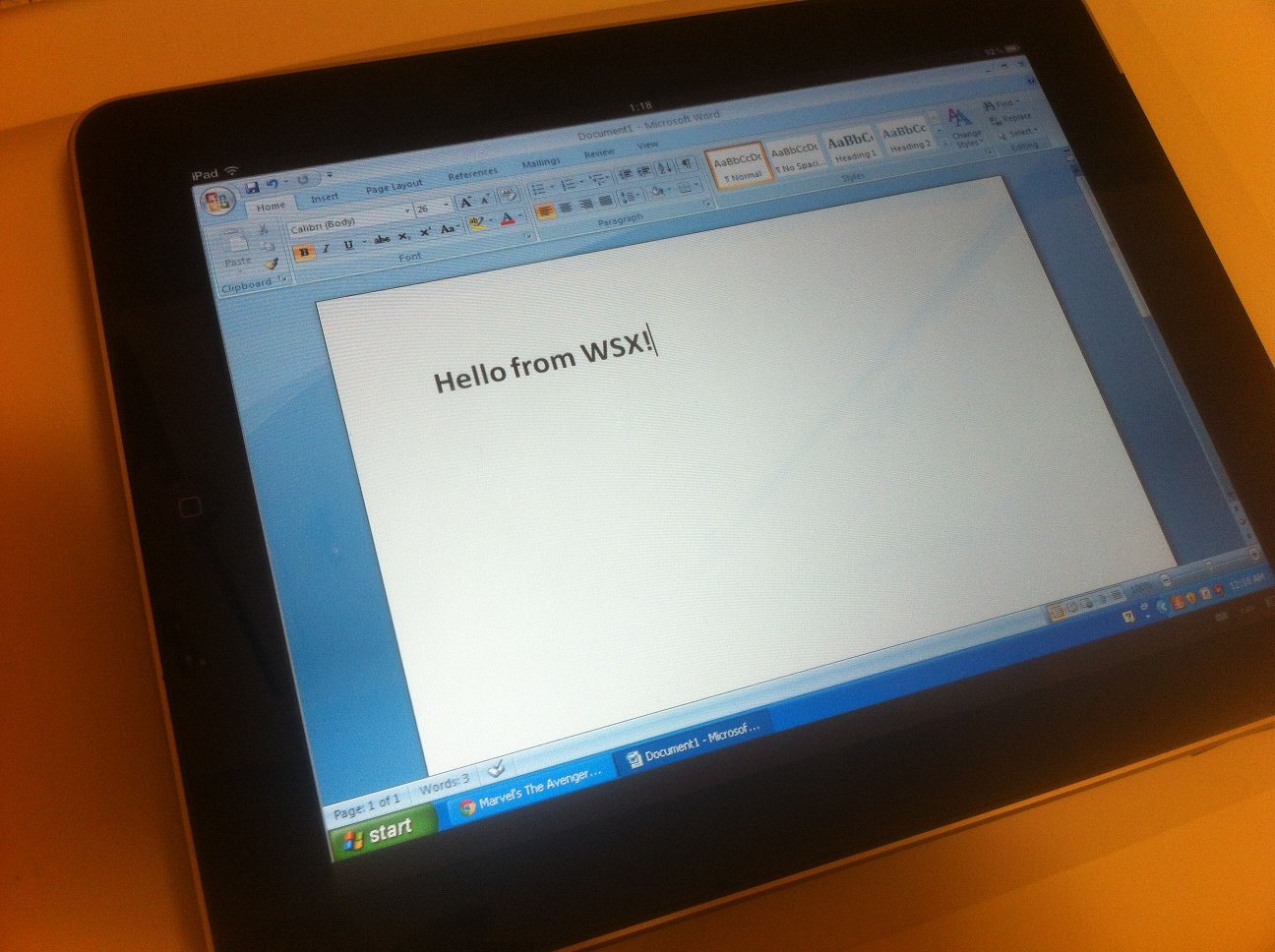

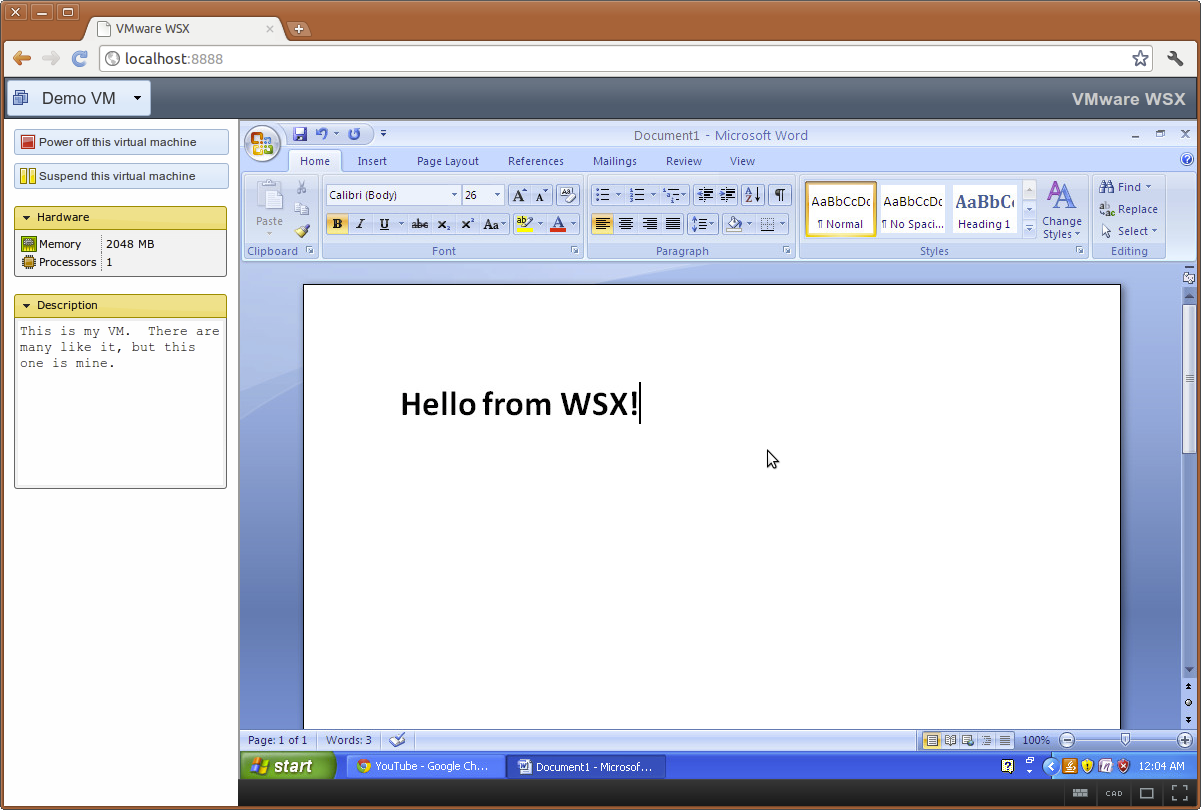

Speech-to-Text on iOS

You know that little microphone button on the iOS keyboard on the latest iPads and iPhones? Pressing that allows you to “type” with your voice in native applications. Now, we support it as well.

Open up an application in the VM (Word, for example), pop up the keyboard, and hit the microphone. Begin speaking, and your words will appear automatically in your application as if you were typing them. It’s fun!

Beginnings of Android Compatibility

I will warn you, this is not fully baked yet.

The main problem with Android is that most browsers, especially the stock Android browser, do not support the modern web features we need. WebSockets and fast Canvas rendering are a couple of the key gaps. Browsers that do support them, like Firefox, suffer from other glaring rendering problems that make for a bad experience.

Work is being done here, though, and if you’re running on an Android browser without WebSockets, we now attempt to use a Flash shim that communicates with the server. This makes WSX semi-usable on the Android browser. However, it’s not fast, and there are input problems. In time, I hope to improve this.
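The fallback described above boils down to a capability check. Here is a minimal sketch of that kind of decision, assuming the client only needs to know whether WebSockets and Flash are available. All of the names (`Transport`, `BrowserCapabilities`, `chooseTransport`, `detectWebSocket`) are hypothetical, and this is not WSX's actual code.

```typescript
// Hypothetical names throughout; a sketch of the fallback idea only.
type Transport = "websocket" | "flash-shim" | "unsupported";

interface BrowserCapabilities {
  hasWebSocket: boolean; // native WebSocket support
  hasFlash: boolean;     // a Flash plugin is available for the shim
}

// Prefer native WebSockets, fall back to the Flash shim, otherwise give up.
function chooseTransport(caps: BrowserCapabilities): Transport {
  if (caps.hasWebSocket) return "websocket";
  if (caps.hasFlash) return "flash-shim";
  return "unsupported";
}

// Feature-detect WebSockets on a global object (for example, `window`).
function detectWebSocket(globalObj: Record<string, unknown>): boolean {
  return typeof globalObj["WebSocket"] !== "undefined";
}
```

Checking for the feature itself, instead of sniffing the browser name, means a future Android browser that gains WebSockets would pick up the faster path without any changes.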

Better iOS Compatibility

- Input is much improved. Capital letters and most special symbols now work, and so do Backspace key repeats. There are still issues with international characters, though.

- Various fixes for things like question dialogs not appearing, username fields having auto-capitalize/correct on, and other little issues here and there.

Better Feedback

- When a login attempt fails, you’ll see an error saying what went wrong, instead of seeing it wait forever.

- We show a spinner now when attempting to connect to the VM’s display. This provides some feedback, especially over slower connections, and mimics what we do with Workstation.

- Attempting to change the power state of a VM now shows a spinner on the appropriate power button. So, press Power On, and the button will spin until it begins to power on.

- If the connection to a server drops, you’ll be notified and taken back to the Home page.

UI Improvements

- Login pages aren’t so bare anymore.

- The giant useless margin on the left-hand side of most pages has been removed.

- Added a logout link! (One of our most heavily requested features.)

Bug Fixes

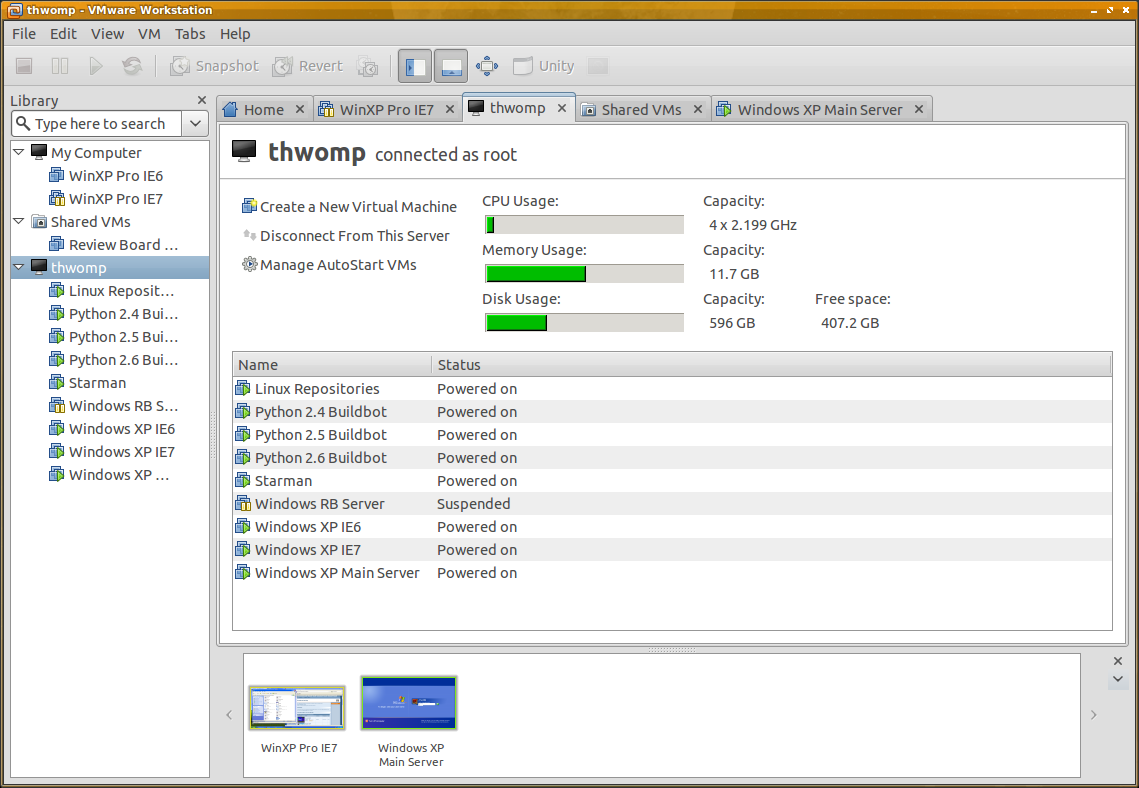

- Connecting to vSphere no longer totally fails. Many users were having some problems with that, and I’m happy to say it should work better now. It’s still not meant to handle thousands of VMs, though.

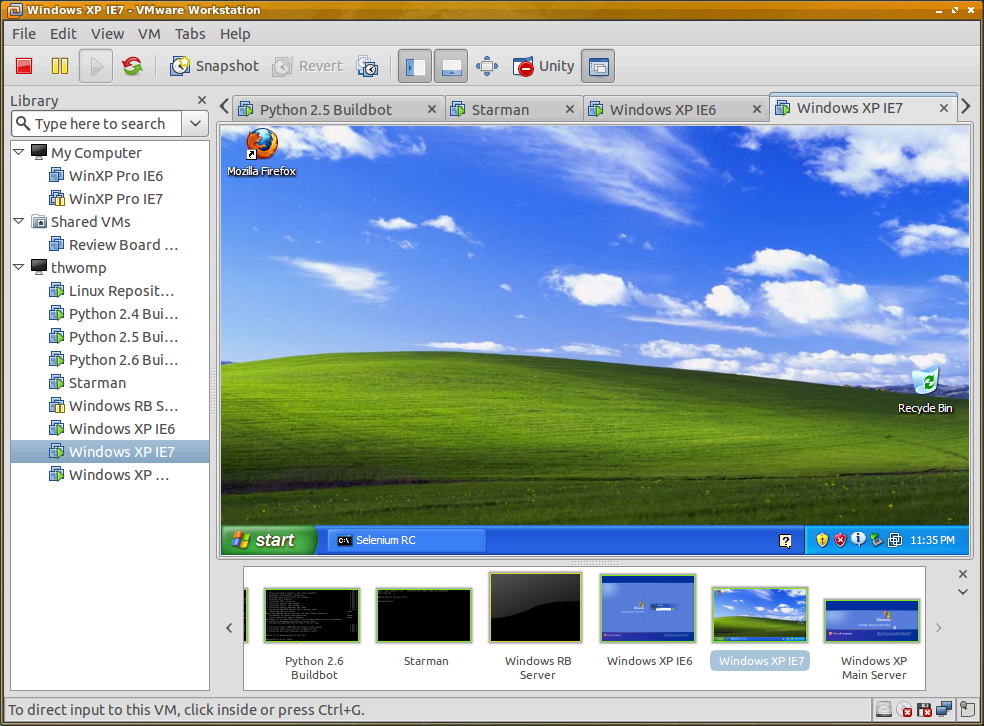

- Pressing Control-Alt-Delete now actually sends that to the VM. Sorry to all of you who couldn’t log into Windows.

- WSX no longer disconnects when updating the screen resolution fails.

- If you connect to multiple servers, the inventories should be correct on each. Previously, they’d sometimes show the wrong server’s inventory.

New Bugs

- Occasionally, the screen may stop updating. We’re looking into that. In the meantime, there’s a Reload button you can press to re-establish the connection to the VM’s display.

What next?

I can’t give away all my secrets, but we’re looking into better ways of handling input in the guest (especially with touch devices), and making WSX a bit more scalable. We’ll continue to put out Tech Previews of WSX while it matures.

In the meantime, let us know how it’s working for you.
